AI safety report cards are out. How did the major companies do?

Plus: Update on EU guidelines; the recent AI Security Forum; how AI increases nuclear risk; and more.

Welcome to the Future of Life Institute newsletter! Every month, we bring 44,000+ subscribers the latest news on how emerging technologies are transforming our world.

If you've found this newsletter helpful, why not tell your friends, family, and colleagues to subscribe?

Today's newsletter is a ten-minute read. Some of what we cover this month:

📊 Our new AI Safety Index results

📝 SaferAI’s Risk Management Ratings

🇪🇺 Update on the Code of Practice

💬 DC AI Security Forum

And more.

If you have any feedback or questions, please feel free to send them to [email protected].

Report Cards Are Here… and No One Made Honour Roll

We’ve released our Summer 2025 AI Safety Index, providing an independent assessment of how leading AI companies are addressing critical safety and security challenges.

Following up on our December 2024 report, the updated index is based on evaluations by six independent AI safety experts, who assessed seven major AI companies: OpenAI, Anthropic, Meta, Google DeepMind, xAI, DeepSeek AI, and Zhipu AI.

As reviewer Stuart Russell noted, the findings indicate that some progress has been made, but there is a long way left to go, especially since no company currently meets the level of rigour required to ensure AI systems are developed and deployed safely.

“Some companies are making token efforts, but none are doing enough… This is not a problem for the distant future; it’s a problem for today.”

Some key insights from the report:

The AI industry remains dangerously unprepared for the risks of the technology it is creating.

AI capabilities are advancing faster than safety practices, with growing disparity between companies.

Only Anthropic, OpenAI, and Google DeepMind report meaningful testing of dangerous capabilities - particularly those linked to large-scale threats such as bioterrorism or cyberattacks.

Whistleblower protection policies and transparency remain underdeveloped across the board.

Anthropic earned the highest overall safety grade… though still just a C+.

OpenAI came in second overall, followed by Google DeepMind.

Chinese firms Zhipu AI and DeepSeek AI received failing grades.

Read the full report now, as covered by The Guardian, The Economist, TIME, Semafor, Bloomberg, and others, to explore detailed evaluations, methodology, and our recommendations for companies to improve their safety measures.

🇪🇺 A Win for Safe AI in Europe!

On July 10, a new milestone in AI governance was achieved with the release of the EU’s General-Purpose AI Code of Practice.

Written by 13 independent experts (including Yoshua Bengio, the world’s most-cited computer scientist) and based on consultation with 1,000+ stakeholders, the voluntary Code offers clear, flexible guidance for how AI companies can align with the EU AI Act, helping translate regulatory obligations into actionable safety measures. As of July 31, the majority of leading AI companies have signed on to the Code, including Anthropic, OpenAI, Google DeepMind, xAI, and Microsoft… with Meta a notable holdout.

Critically, the Code sets out actionable guidelines for how companies should address systemic risks such as AI deception or self-replication, including by assigning clear internal roles for oversight and risk monitoring, and by establishing a culture that supports whistleblowers.

We welcome these advances but note the Code lacks any mechanism for regular or emergency updates, which could threaten the relevance of some guidelines as AI development continues to speed up. However, we’re optimistic that the European Commission will proactively review and update the Code as needed, and we look forward to seeing it enforced to benefit the public, AI system deployers, and providers alike.

You can read our full statement in the post below:

🇪🇺👏 A historic milestone for AI governance!

Following consultation with 1,000+ stakeholders, a group of 13 independent experts - including the world's most-cited computer scientist @Yoshua_Bengio - have published the final version of the EU's General Purpose AI Code of

— Future of Life Institute (@FLI_org)

8:07 PM • Jul 11, 2025

What’s New at FLI?

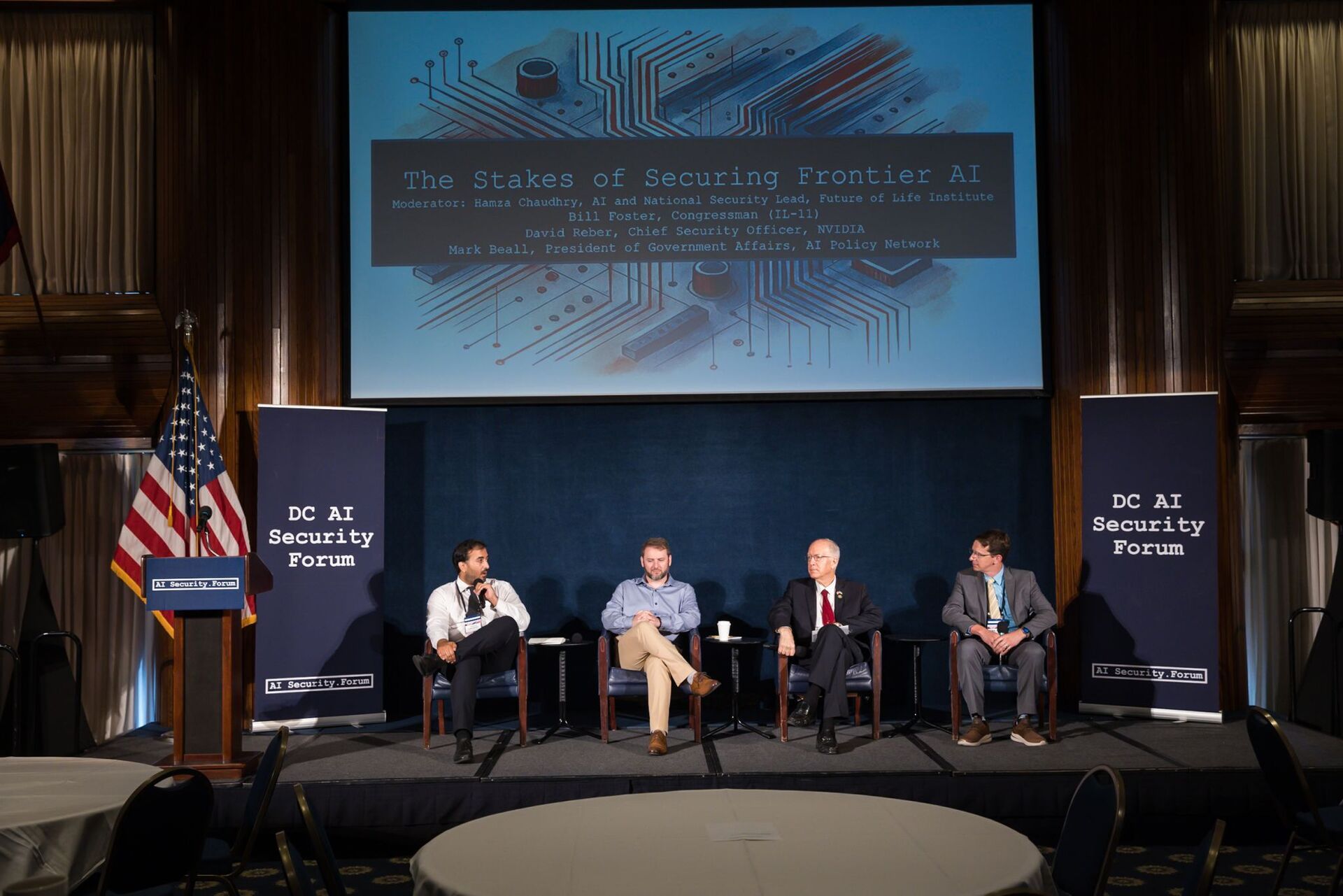

We helped organize the DC AI Security Forum, the largest gathering to date of policymakers, national security officials, and private sector leaders focused on securing AI systems. More than 100 congressional and agency staffers, as well as dozens of technical experts, attended its panels and workshops.

With our friends at the Federation of American Scientists, we also co-hosted an AIxCyber roundtable in DC, bringing together academia, policy, and industry to discuss the future trajectory of interactions between AI and cyber risks.

FLI President Max Tegmark joined National Association of Evangelicals President Rev. Walter Kim for an NAE podcast episode about human wisdom in the age of AI and how we can navigate AI’s benefits and risks.

On National Whistleblower Day (July 30), we were proud to join the AI Whistleblower Initiative coalition of 30+ global organizations and experts calling on AI companies to publish their whistleblowing policies.

Together with the All Africa Conference of Churches, we co-hosted a two-day consultation in Nairobi with 25 church leaders from across Africa, discussing opportunities, risks, and challenges of AI development from the perspectives of faith leaders.

FLI’s Head of U.S. Policy, Michael Kleinman, spoke to Fast Company about the 99-1 Senate vote against the ban on state AI regulation: "AI companies will say [that] any regulation means there’s no innovation, and that is not true. Almost all industries in this country are regulated. Right now, AI companies face less regulation than your neighborhood sandwich shop."

FLI’s AI & National Security Lead, Hamza Chaudhry, joined CNN for a segment about the concerning AI-powered security breach targeting U.S. Secretary of State Marco Rubio:

"The surprising thing isn't that this has happened. It's surprising that it's taken this long... It was only a matter of time before state and non-state actors which wish to harm the United States woke up and said 'this is a key tool in our arsenal'."

— Future of Life Institute (@FLI_org)

9:27 PM • Jul 10, 2025

On the FLI Podcast, host Gus Docker was joined by:

Daniel Kokotajlo, Executive Director of the AI Futures Project and co-author of AI 2027, on why the AI race ends in ‘disaster’.

Anders Sandberg, Mimir Center Senior Research Fellow, on what comes after superintelligence.

Tom Davidson, Forethought Senior Research Fellow, on the growing threat of AI-enabled coups.

Calum Chace, keynote speaker on AI and Conscium co-founder, on how AI could replace humanity.

On Our Radar

Rating risk management: SaferAI released their new AI Risk Management Ratings, assessing 12 AI companies’ risk management practices across risk identification, risk analysis and evaluation, risk treatment, and risk governance. As in our new AI Safety Index, Anthropic came out on top overall, with OpenAI in second place… although grades were still disappointing: the ratings found weak to very weak risk management practices at all companies.

The only winning move is not to play: With the 80th anniversary of the Trinity Test in July, and the 80th anniversary of the devastating Hiroshima and Nagasaki atomic bombings following in August, we’re reminded of the enduring threat of a nuclear exchange and the immense human cost of nuclear weapons. Our friends at The Elders put together an informative post detailing how AI could heighten this risk:

🚩☢️6️⃣ Facts: AI & Nuclear Conflict Risks

As global conflict risks spread, the acceleration of unregulated AI could add further danger to the growing nuclear threat. Here’s why:

1️⃣ Faster proliferation: There is no global regulation limiting how and where AI is deployed. It

— The Elders (@TheElders)

9:01 AM • Jul 11, 2025

What we’re watching: Digital Engine released a new YouTube video showcasing the grave risks humanity faces as AI companies race ahead to build ever more powerful AI systems. Bonus points for the AI Safety Index shoutout!