- Future of Life Institute Newsletter

Congress Considers Banning State AI Laws... Again...

Plus: The first reported case of AI-enabled spying; why superintelligence wouldn't be controllable; takeaways from WebSummit; and more.

Welcome to the Future of Life Institute newsletter! Every month, we bring 65,000+ subscribers the latest news on how emerging technologies are transforming our world.

If you've found this newsletter helpful, why not tell your friends, family, and colleagues to subscribe?

Today's newsletter is a seven-minute read. Some of what we cover this month:

🏛️ Preemption updates

🚨 The first case of AI espionage

🔃 How superintelligence would absorb power

🎥 New videos to watch and share

And more.

If you have any feedback or questions, please feel free to send them to [email protected].

The Big Three

Key updates this month to help you stay informed, connected, and ready to take action.

→ Preemption pt. II: AI companies are pushing the U.S. Congress to sneak an 11th-hour clause into the National Defense Authorization Act (NDAA) that would override (“preempt”) current and future state-level regulations designed to ensure safe and responsible AI development. If this sounds familiar, that's because just months ago the Senate voted 99-1 to throw out a similar provision banning state AI laws.

Strong, widespread opposition to AI preemption spans the political spectrum, from Ron DeSantis to Bernie Sanders, and includes over 260 state legislators who signed this letter. Americans on both sides of the aisle reject preemption by a 3-to-1 margin. Despite this resistance, the fight is not yet over. Blocking state-level AI legislation would strip states of their ability to tackle pressing AI-related concerns, including job loss, harm to children, surveillance, discrimination, loss of control, and more.

If you live in the U.S., you can contact your Representative now and tell them to oppose federal AI preemption in the NDAA with our quick and easy form here.

“All other companies are forced to meet basic safety standards, from airlines to pharmaceutical companies to your local sandwich shop. Yet, U.S. innovation is the envy of the world. Companies compete to innovate and make profits; the public sector steps in to stop people getting hurt. To make such a rank exception for the AI industry is nothing less than corporate welfare, and should be treated as such.”

→ Superintelligence Statement hits 100K and counting: We’re thrilled to share that in just over one month, the Superintelligence Statement has amassed 120,000+ signatures from people with a huge array of backgrounds, including staff from leading AI companies.

If you need a refresher, the Statement calls for “a prohibition on the development of superintelligence, not lifted before there is broad scientific consensus that it will be done safely and controllably, and strong public buy-in”.

Sign here if you wish to join the call, and please share!

→ 1st reported case of AI-enabled espionage: Anthropic released a report explaining how Chinese hackers jailbroke Anthropic’s Claude Code AI agent and used it to carry out a cyberattack, 80-90% automated, against tech companies and government agencies. According to Anthropic, it’s likely the “first documented case of a large-scale AI cyberattack executed without substantial human intervention”. Unprecedented as it is, this is unfortunately likely just a preview of what’s to come.

Heads Up

Other don't-miss updates from FLI, and beyond.

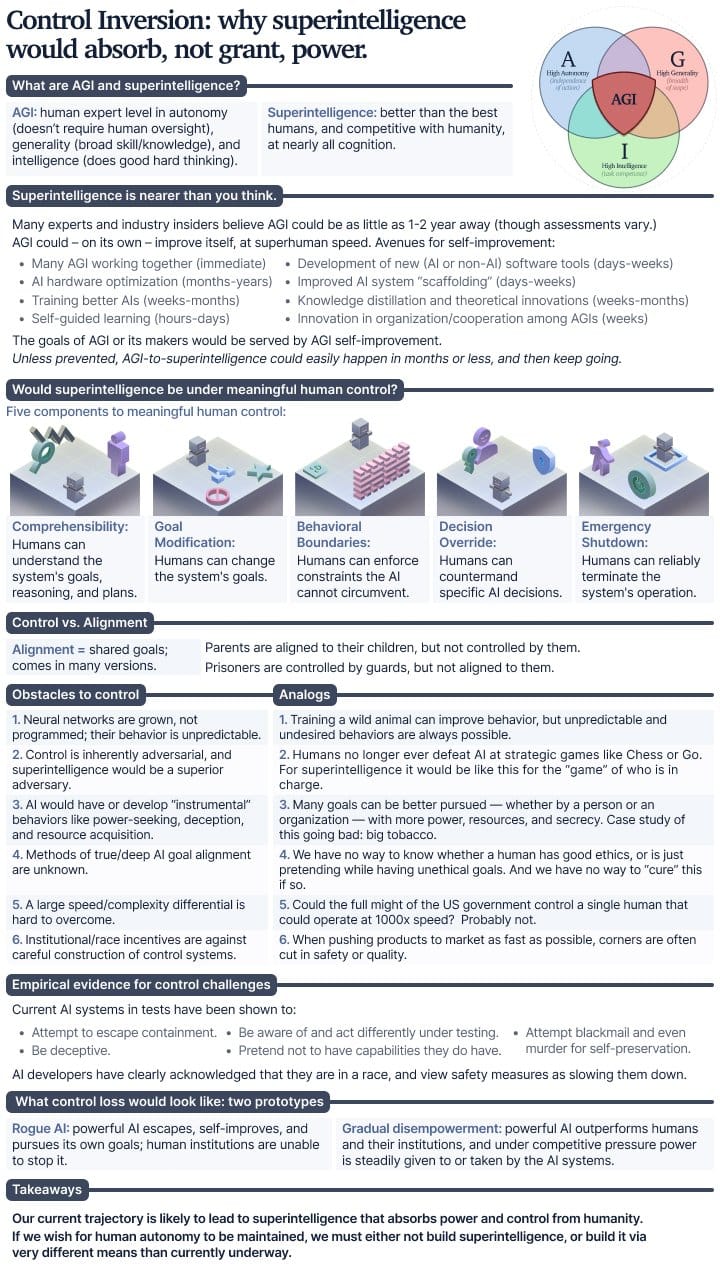

→ Control Inversion: FLI Executive Director Anthony Aguirre released a new study that has left him "more convinced than ever" that, on the current development path, superintelligence would not be under meaningful human control. Anthony's findings counter the dangerous assumption that whichever country or company builds superintelligence first will gain unprecedented power and wealth. Instead, as he lays out in the paper, superintelligence would absorb power from its creators and put all of humanity at risk.

→ Max at WebSummit: FLI President Max Tegmark went to WebSummit in Lisbon, where he gave a talk on the path to “95% AI”: the helpful Tool AI that, according to polls, the vast majority of the public actually wants.

At WebSummit, Max was also interviewed by Forbes and RAZOR about the looming threat of superintelligence.

→ KTFH Creative Contest results incoming: Our $100,000 Keep the Future Human Creative Contest has now closed, and we'll announce the winning projects in the next 1-2 weeks. Sign up for notifications on the Keep the Future Human website now to hear when the winners are announced!

→ Genesis Mission: FLI’s AI and National Security Lead, Hamza Chaudhry, joined CNN for a segment discussing President Trump’s new AI Executive Order launching the Genesis Mission. Hamza discussed the positive precedent it could set for the development of useful AI tools, along with the need for legislative oversight and guardrails to mitigate dual-use risks.

→ Enjoy the Silence: Check out our new video for this holiday season.

→ Is there an AI bubble?: Mark Brakel, FLI’s Director of Policy, continues his new weekly video series “Inside the Machine”. This month, he covered everything from the AI bubble to New York’s RAISE Act and a new RAND paper on rogue AI. Follow Mark on LinkedIn to get his newest video in your feed every Thursday.

→ One more month for FLI postdoc fellowships: Applications for our technical postdoc fellowships are due January 5. Don’t forget to apply: fellows receive an annual $80,000 stipend, additional research funding, and extensive networking opportunities.

On the FLI Podcast, host Gus Docker was joined by:

→ Karl Koch, founder of the AI Whistleblower Initiative, to discuss what happens when insiders sound the alarm on AI.

→ Will MacAskill, Senior Research Fellow at Forethought, on his “Better Futures” essay series and why we’re not ready for AGI.

→ Tyler Johnston, Executive Director of the Midas Project, on why OpenAI is trying to silence its critics and how we can hold Big Tech accountable.

We also released two new highlight reels: one from Anthony Aguirre’s episode on Keep the Future Human, and one from Nate Soares’ episode on why building superintelligence would mean human extinction.